artwork by Gemini 3 Pro

Journey of AI-Led FoodInsight Development with BMAD

FoodInsight

Part 3 of 3What if a software project’s most productive engineer never wrote a single line of code themselves? FoodInsight proves this isn’t hypothetical. With 29 stories, 98 story points, and approximately 5000 lines of code delivered in 3 days with only 20 hours of human oversight, this edge AI food monitoring system demonstrates what becomes possible when AI agents drive the entire development lifecycle.

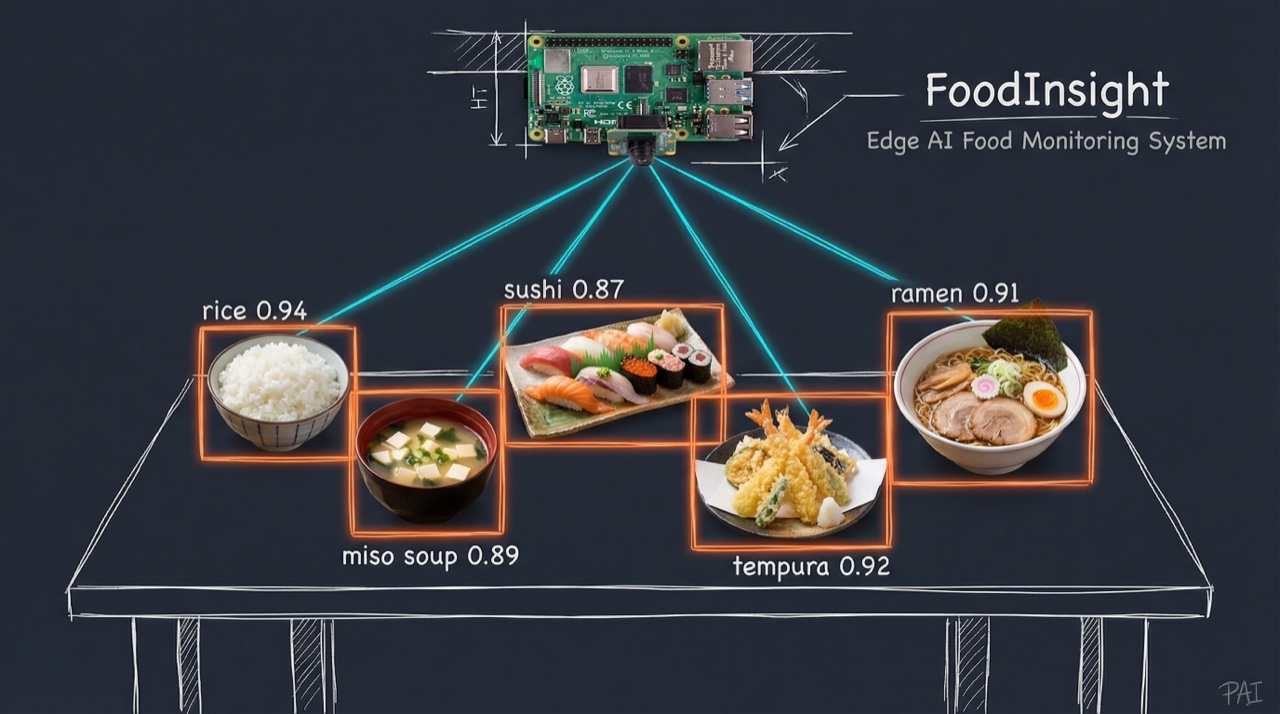

Figure. Edge AI food detection: A Raspberry Pi camera monitors a dining table, detecting Japanese dishes in real-time with YOLO11. Each dish receives a bounding box and confidence score, all processed locally.

This article captures the complete development journey of FoodInsight, an edge AI food monitoring system built entirely through AI-led software development. From requirements gathering to implementation, AI agents orchestrated the entire lifecycle, demonstrating a new paradigm in human-AI collaborative engineering.

The Problem

Office break rooms and cafeterias share a common frustration: nobody knows what snacks are available until they walk over. Manual inventory tracking is tedious and quickly abandoned. Existing smart solutions require cloud dependencies, raising privacy concerns about camera footage leaving the premises.

The challenge: build a food monitoring system that is smart enough to detect items automatically, private enough to keep all images local, and simple enough to run on inexpensive edge hardware.

The Vision

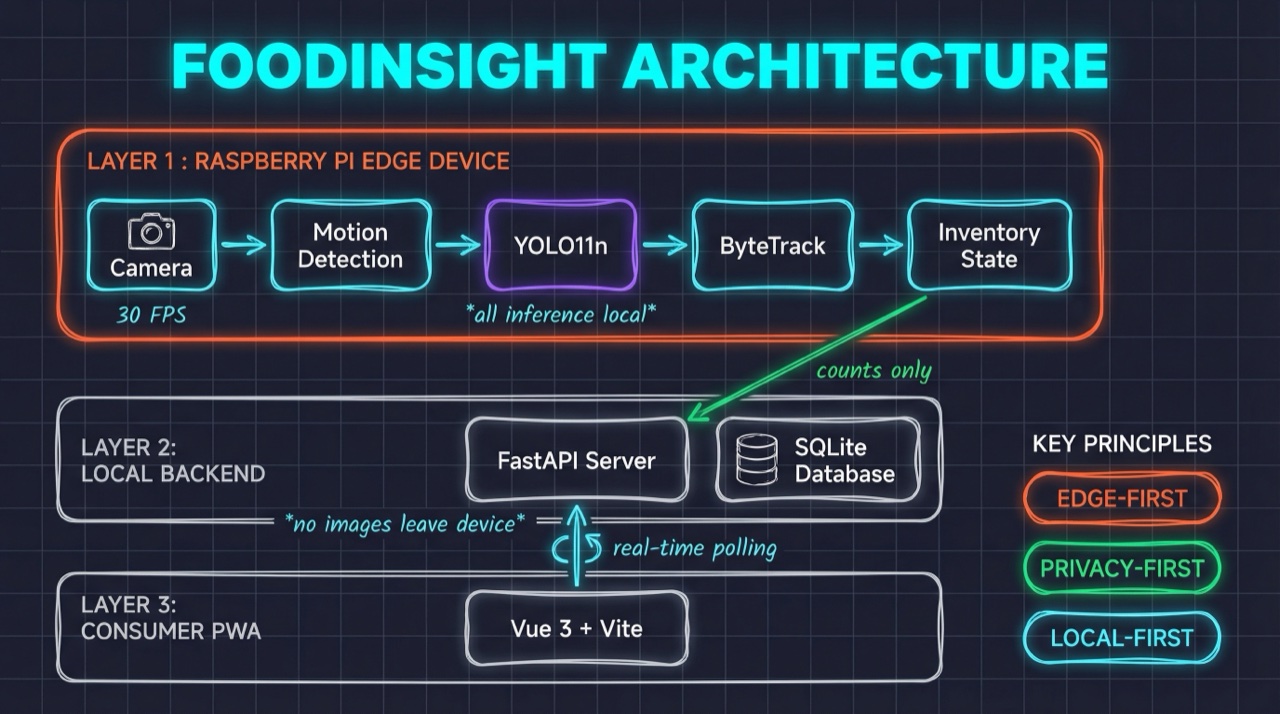

FoodInsight is a privacy-first, local-first food inventory monitoring system using YOLO11 object detection on Raspberry Pi edge devices. The system detects food items in real-time, tracks inventory changes, and provides a consumer-facing PWA for checking availability.

Key Design Principles:

- Edge-First: All inference happens locally on Raspberry Pi

- Privacy-First: Only metadata (counts, not images) leaves the device

- Local-First: SQLite database, no cloud dependencies required

Figure. Local-first architecture: Camera frames flow through motion detection and YOLO11n inference on the edge device. Only inventory deltas (counts, not images) push to the local SQLite backend. The PWA polls for updates.

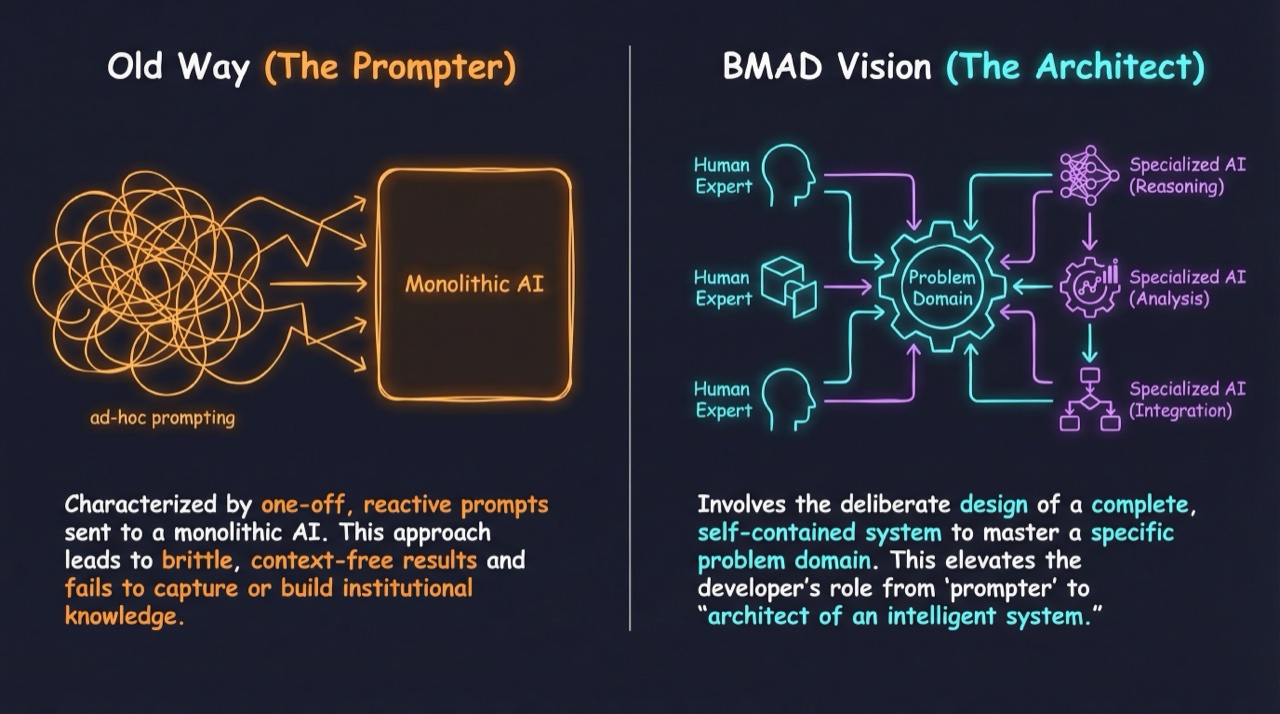

But building a system this complex with AI agents requires more than just prompting. Without structure, we’d face the very black-box problem that makes enterprise teams wary of AI-generated code. It requires a structured methodology. Enter BMAD.

Why BMAD? The Abstraction-Control Trade-off

Enterprise leaders investing in AI-assisted development often find that promised productivity gains come at a steep price: loss of governance, traceability, and architectural integrity. Unstructured, prompt-driven AI creates “black box” codebases that are difficult to maintain, audit, and scale.

Figure. The paradigm shift: Ad-hoc prompting to a monolithic AI produces brittle, context-free results. BMAD elevates developers from “prompters” to “architects of intelligent systems,” orchestrating specialized AI agents around a problem domain.

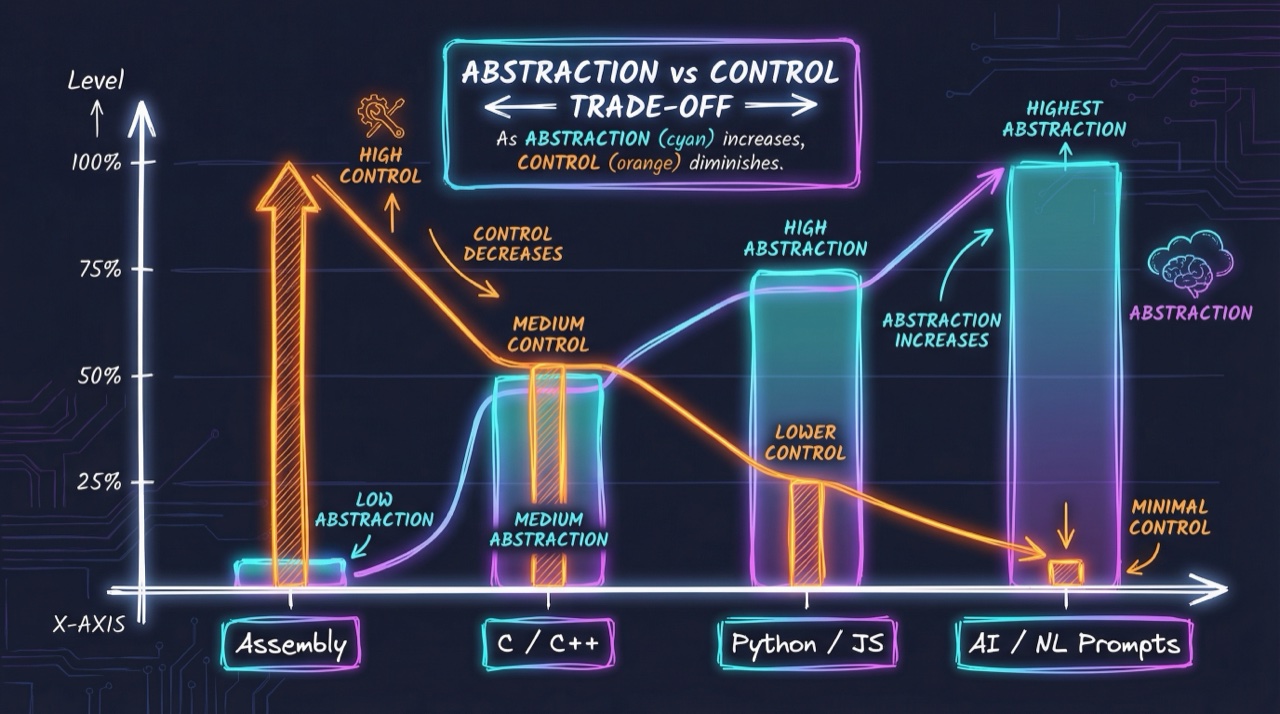

The Abstraction Trap

As programming abstractions increase, from assembly to high-level languages to natural language prompts, developers gain speed but face corresponding loss of control. With AI-generated code, this trade-off deepens significantly.

Figure. Higher abstraction accelerates development but sacrifices control. AI tools amplify speed at the cost of precision and accountability.

The philosophical underpinning, as Harrison Ainsworth’s “Tractatus Computo Philosophicus” states: “Software is a logical construction, and its correctness must ultimately be left to human judgement” [1]. Current AI tools, while powerful, create a noticeable loss of control as abstraction increases, leaving developers struggling to ensure precision, track intent, and confidently modify AI-generated solutions.

BMAD: From Chaos to Clarity

The BMAD Method (Breakthrough Method for Agile AI-Driven Development) provides the crucial framework to re-establish control [2]. It leverages specialized AI agents, each embodying a specific role: Analyst, Product Manager, Architect, Scrum Master, Product Owner, Developer, and QA.

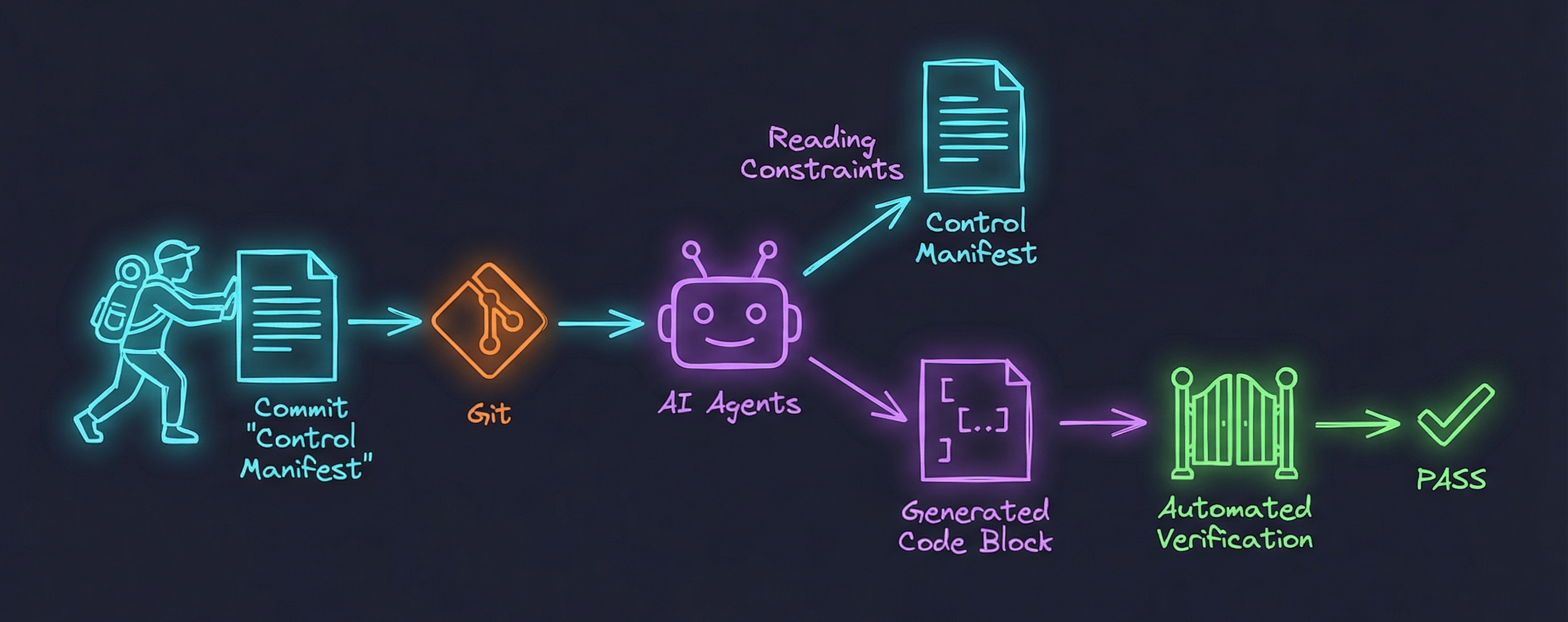

The true transformation lies in overlaying this agentic process with robust Git-based versioning. Every artifact, from PRD to architecture to granular stories, is treated as a versioned asset:

| Benefit | Description |

|---|---|

| Traceability | Every change from human or AI is tracked, eliminating the black-box effect |

| Collaborative Review | Stories undergo rigorous PR reviews where humans inspect AI-generated content |

| Single Source of Truth | All artifacts versioned and centralized; entire team operates from consistent blueprint |

| Enhanced Productivity | Automated creation of high-fidelity artifacts accelerates development cycles |

Figure. The control loop: Humans commit control manifests (PRDs, architecture docs, story specs) to Git. AI agents read these constraints when generating code, which then passes through automated verification. Human-authored artifacts govern AI behavior.

FoodInsight serves as a practical validation of this theoretical framework. The following sections document how BMAD agents drove the complete development lifecycle.

AI Agents in the Development Process

With the methodology established, who exactly built FoodInsight? Not human developers writing code, but a team of specialized AI agents, each with distinct expertise and personality. The development leveraged the BMAD workflow system, where these agents with distinct personas drove each phase of development [2].

Figure. The SDLC team gathered in their scrum room: from Mary’s analytical insights to Sally’s user advocacy, each specialist brings distinct expertise to the software development lifecycle.

| Agent | Name | What Makes Them Unique |

|---|---|---|

| analyst | Mary | Uncovers hidden requirements through systematic investigation |

| architect | Winston | Designs systems for longevity, balancing elegance with constraints |

| dev | Amelia | Translates architecture into production-grade code |

| pm | John | Investigative strategist who validates feature requests as hypotheses |

| po | Sarah | Bridges stakeholders and dev teams with ruthless prioritization |

| sm | Bob | Facilitates team processes, removes blockers, keeps sprints focused |

| ux-expert | Sally | Advocates for users with interfaces matching mental models |

Phase 1: Requirements & Analysis

Mary the Analyst led the initial requirements gathering:

| Deliverable | Agent | Output |

|---|---|---|

| Product Requirements Document | Mary (Analyst) | FoodInsight PRD.md |

| User Stories & Acceptance Criteria | Mary + Sarah (PO) | Epic breakdown |

| Market Analysis | Mary | Competitive landscape |

The PRD captured:

- Problem statement (manual inventory tracking inefficiency)

- Target users (office managers, cafeteria staff)

- Core features (detection, tracking, alerts)

- Success metrics (accuracy, latency, user adoption)

Phase 2: Architecture & Design

Winston the Architect designed the technical foundation:

| Deliverable | Agent | Output |

|---|---|---|

| Solution Architecture | Winston (Architect) | FoodInsight Solution Architecture.md |

| System Components | Winston | Detection pipeline, API design |

| Technology Selection | Winston | YOLO11n, FastAPI, Vue 3, SQLite |

Sally the UX Designer created the user experience:

| Deliverable | Agent | Output |

|---|---|---|

| UX Specification | Sally (UX Expert) | FoodInsight UX Specification.md |

| Design System | Sally | “Playful & Vibrant” theme |

| Component Library | Sally | Cards, badges, animations |

Phase 3: Epic Breakdown

John the PM and Sarah the PO decomposed the work into 6 epics:

| Epic | Stories | Points | Focus |

|---|---|---|---|

| Epic 1 | 6 | 21 | Edge Detection System |

| Epic 2 | 5 | 18 | Backend & Consumer App |

| Epic 3 | 4 | 13 | UX Foundation |

| Epic 4 | 4 | 13 | UX Delight (animations) |

| Epic 5 | 4 | 11 | UX Polish (accessibility) |

| Epic 6 | 6 | 22 | Edge Admin UX Revamp |

Total: 29 stories, 98 story points

Implementation with Parallel Engineer Agents

Here’s where AI-led development gets interesting. Instead of one agent tackling stories sequentially, we analyzed dependencies and unleashed multiple agents simultaneously. The result? Three days of sequential work compressed into one.

The implementation phase used Claude Code’s parallel agent architecture to maximize efficiency [3]. Multiple engineer agents worked simultaneously on independent stories.

Dependency Graph Analysis

Before launching parallel work, the system analyzed story dependencies:

Epic 6 Dependency Graph:

E6-S1 (Design Tokens) ──┬── E6-S2 (Status Cards)

├── E6-S3 (Camera Preview)

└── E6-S4 (Dark Mode)

│

E6-S2 + E6-S3 ──────────────── E6-S5 (Responsive Layout)

│

E6-S5 ─────────────────────── E6-S6 (Accessibility)

Parallel Execution Strategy

Wave 1: E6-S1 (Design Tokens) - Foundation

Wave 2: E6-S2, E6-S3, E6-S4 - Parallel (3 agents)

Wave 3: E6-S5 (Responsive) - Depends on Wave 2

Wave 4: E6-S6 (Accessibility) - Final integration

This approach reduced total implementation time by executing independent stories concurrently.

Iterative Refinement Cycle

Human feedback drove rapid iterations:

Example: Status Card Optimization

Initial Implementation:

- Full-height status cards with large fonts

- Excessive vertical space consumption

User Feedback: “Status cards are too tall, taking up too much vertical space”

AI Response:

/* Before */

.status-card { padding: var(--space-lg); }

.status-card__value { font-size: 2.5rem; }

/* After */

.status-card { padding: var(--space-sm) var(--space-md); }

.status-card__value { font-size: 1.5rem; }

Example: Layout Reorganization

User Feedback: “Put Device Information above Camera Preview”

AI Response: Restructured HTML layout, moved static content above dynamic camera section, improving information hierarchy.

Example: Emoji Enhancement

User Feedback: “Always showing popcorn emoji is boring”

AI Response: Expanded emoji mapping from 12 to 60+ food items with case-insensitive lookup. What surprised us most was the speed: a one-line complaint translated into a 60+ item lookup table within minutes. No tickets filed, no spec written, just immediate response:

const emojiMap: Record<string, string> = {

// Snacks

chips: '🥔', donut: '🍩', cookie: '🍪',

// Drinks

bottle: '🍶', water: '💧', soda: '🥤',

// Fruits

apple: '🍎', banana: '🍌', orange: '🍊',

// ... 60+ mappings

default: '🍿'

}

Notice the pattern across these examples: human feedback focused on what to change, never how. The AI handled implementation details, leaving humans free to focus on user experience and design intent.

Technical Implementation Highlights

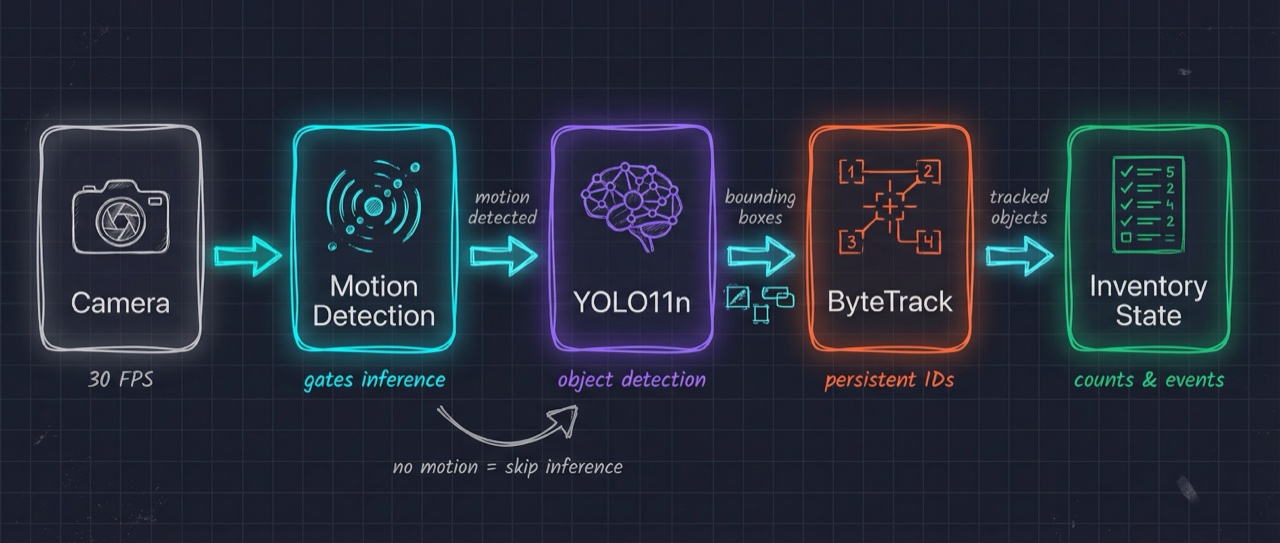

Edge Detection Pipeline

Figure. Camera frames at 30 FPS flow through motion detection, which gates expensive YOLO11n inference. Only frames with detected motion proceed to object detection, then ByteTrack for persistence, and finally inventory state management.

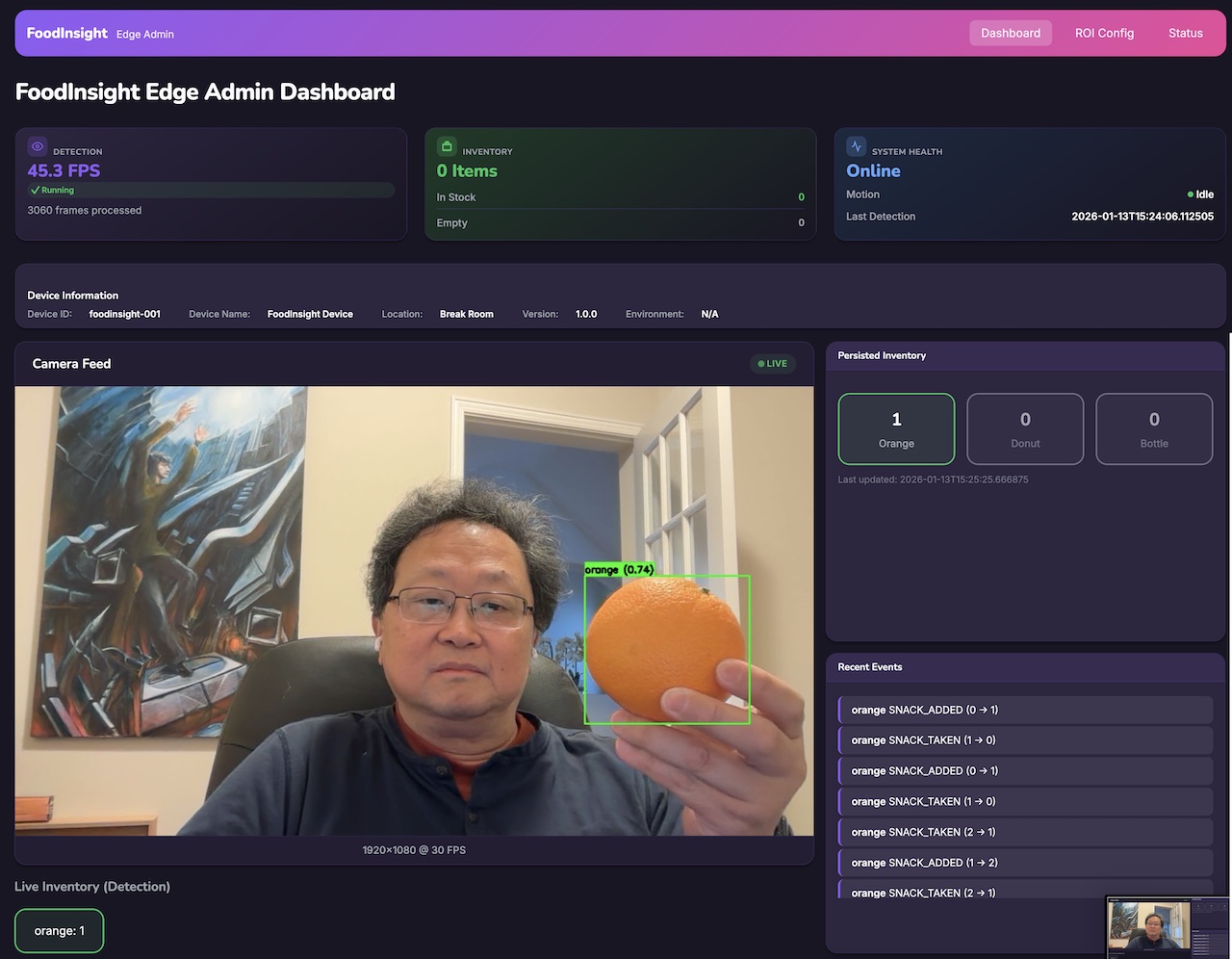

Admin Portal (Flask + HTMX)

- Real-time status cards with HTMX polling

- Live MJPEG camera preview

- ROI configuration UI

- Dark mode support via CSS custom properties

Figure. Edge Admin Dashboard: Real-time detection view showing camera feed with YOLO11 bounding boxes, compact status cards, device information, persisted inventory counts, and recent events log.

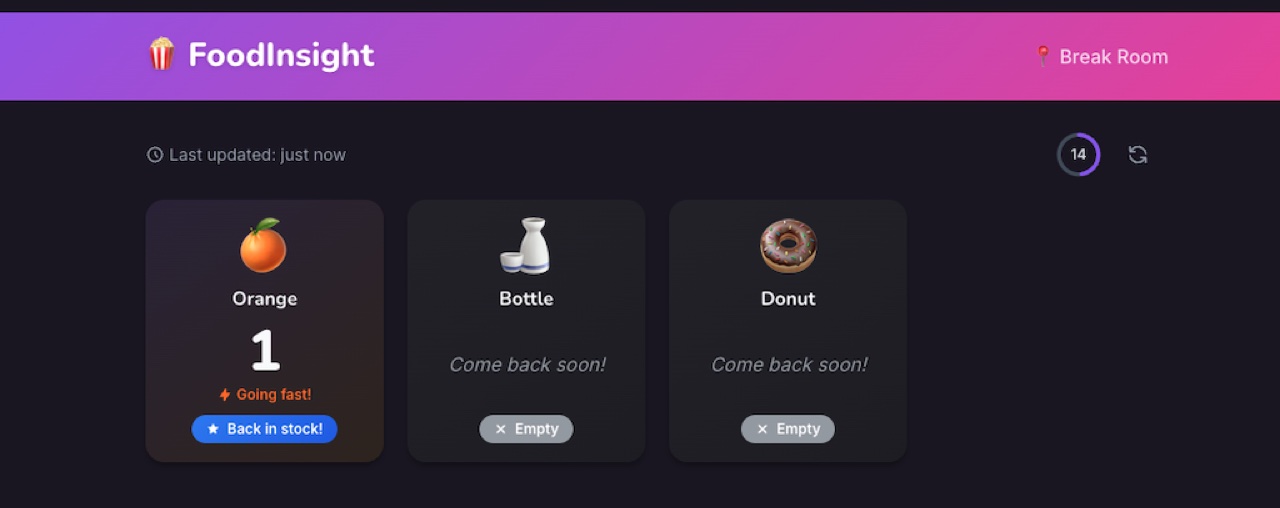

Consumer PWA (Vue 3 + Vite)

- Pinia state management

- Real-time inventory polling

- Animated status badges

- “Back in Stock” celebrations

Figure. Consumer PWA showing available inventory: Real-time display of detected items with counts, status badges, and food-specific emoji icons. Green borders indicate items in stock.

Design System

CSS custom properties for consistent theming:

:root {

--snack-purple: #8b5cf6;

--fresh-green: #10b981;

--warning-orange: #f59e0b;

--space-sm: 8px;

--space-md: 16px;

--radius-lg: 16px;

}

Documentation & Knowledge Sharing

AI agents drove not just code but comprehensive documentation. They treated knowledge capture as an integral part of the SDLC.

Project Documentation

| Document | Purpose |

|---|---|

| README.md | Project overview, quick start, API reference |

| Server README | FastAPI backend documentation |

| App README | Vue 3 PWA documentation |

| Epic Stories | Detailed implementation specifications |

Screenshot Integration

Real screenshots from running components were captured and integrated:

- Admin Dashboard (detection overlay, status cards)

- ROI Configuration UI

- Consumer PWA (in-stock and out-of-stock states)

Technical Blog Posts

Two companion blog posts were authored to share the journey with the broader community:

- From YOLO v2 to YOLO11: Food Detection Evolution [4]

- Training custom food detection models

- Dataset preparation (UECFOOD100)

- Performance comparisons across YOLO versions

- FoodInsight: Edge AI Food Monitoring [5]

- Complete system architecture

- Privacy-first design principles

- Implementation guide

Key Learnings

What Worked Well

- BMAD Agent Personas: Distinct agent roles (Analyst, Architect, UX) produced focused, high-quality artifacts

- Parallel Execution: Dependency-aware parallelization significantly reduced implementation time

- Iterative Feedback: Rapid human feedback cycles enabled quick refinement

- Documentation-First: PRD and Architecture docs provided clear implementation guidance

Challenges & Solutions

| Challenge | Solution |

|---|---|

| Agent context limits | Structured handoffs with clear summaries |

| Consistency across agents | Design tokens and style guides |

| Integration testing | End-to-end test scenarios in stories |

AI-Human Collaboration Pattern

Human: High-level intent ("make cards thinner")

↓

AI: Technical translation (reduce padding, font-size)

↓

Human: Visual validation (review changes)

↓

AI: Commit and document

Project Outcomes

Metrics

| Metric | AI-Led | Traditional Estimate |

|---|---|---|

| Total Stories | 29 | 29 |

| Story Points | 98 | 98 |

| Components | 3 (Edge, Backend, PWA) | 3 |

| Lines of Code | ~5000+ | ~5000+ |

| Development Duration | ~3 days | ~6-8 weeks |

| Human Hours | ~20 hours | ~400+ hours |

| AI Agent Sessions | 50+ | N/A |

The stark contrast in human hours, 20 versus 400+, represents the core value proposition. Humans shifted from writing code to directing AI agents and validating outputs.

Artifacts Produced

- Product Requirements Document

- Solution Architecture

- UX Specification

- 6 Epic specifications with 29 stories

- Full implementation (Python, TypeScript, CSS)

- Documentation and README files

- 2 technical blog posts

Replicating This Approach

For teams wanting to adopt AI-led development:

-

Start with BMAD Agents: Use Mary (Analyst) for requirements before any coding. The PRD becomes the contract between human intent and AI execution.

-

Version Everything: PRD, architecture, stories, and code all go in Git. This creates the audit trail that makes AI-generated artifacts trustworthy.

-

Map Dependencies First: Before launching parallel agents, understand which stories depend on others. Parallel execution without dependency awareness creates integration nightmares.

-

Keep Human in the Loop: Visual validation catches issues AI misses. The pattern is: AI proposes, Human reviews, AI refines.

-

Iterate Rapidly: Small feedback cycles (“make cards thinner”) beat big upfront specs. AI excels at rapid iteration when given clear, focused direction.

-

Document as You Go: Treat documentation as part of the SDLC, not an afterthought. AI agents can generate README files, API docs, and blog posts alongside code.

What If We Hadn’t Used BMAD?

What would have happened without the structured methodology? We’d likely have a working prototype, but with no architectural documentation, inconsistent code patterns across components, and the dreaded “it works but nobody knows why” problem.

The PRD and Architecture docs became our safety net. Every AI-generated line of code traced back to human-validated requirements. When we needed to understand why a component worked a certain way, the answer was in Git, not in someone’s head.

Without BMAD’s agent personas, we’d also lose the specialized focus. Mary’s analyst perspective caught requirements that a generic “code this feature” prompt would miss. Winston’s architectural rigor prevented the accumulation of technical debt that plagues rapid prototypes. The personas weren’t just roleplay; they were structured thinking applied to each phase.

Concluding Remarks

FoodInsight demonstrates that AI agents can effectively drive the entire software development lifecycle when properly orchestrated. The BMAD workflow system provided structure, while parallel engineer agents enabled efficient implementation. Human oversight remained essential for validation and creative direction, but the heavy lifting of analysis, design, and coding was successfully delegated to AI.

The FoodInsight journey suggests a new division of labor: AI agents handle the mechanical aspects of software development, including analysis, design, coding, and documentation, while humans provide creative direction, quality judgment, and domain expertise.

“This is not AI replacing developers; it’s AI amplifying what developers can accomplish.”

This project serves as a template for future AI-led development initiatives, showing how specialized agent personas, clear documentation, and iterative feedback loops combine to produce production-quality software.

References

[1] Harrison Ainsworth. Tractatus Computo Philosophicus. HXA7241, 2010.

- Philosophical framework on software as logical construction requiring human judgement

[2] BMad Code. BMAD Method. GitHub, 2025.

- The Breakthrough Method for Agile AI-Driven Development framework

[3] Anthropic. Claude Code. Anthropic, 2025.

- CLI-based AI coding assistant with parallel agent capabilities

[4] Benny Cheung. From YOLO v2 to YOLO11: Food Detection Evolution. Benny’s Mind Hack, 2026.

- Training custom food detection models with YOLO versions

[5] Benny Cheung. FoodInsight: Edge AI Food Monitoring. Benny’s Mind Hack, 2026.

- Complete system architecture and implementation guide

[6] Benny Cheung. Applied BMAD: Reclaiming Control in AI Development. Benny’s Mind Hack, 2025.

- Practical application of BMAD methodology

[7] Benny Cheung. Harmonizing Two AI Agent Systems: A Practical Journey. Benny’s Mind Hack, 2025.

- Integrating BMAD with Personal AI Infrastructure

[8] Daniel Miessler. Building a Personal AI Infrastructure (PAI). Daniel Miessler Blog, 2025.

- Foundational concepts for personal AI infrastructure

[9] FoodInsight Project. GitHub Repository. GitHub, 2026.

- Complete source code for the FoodInsight system