artwork by Clara Cheung

Dify - Your Weekend GenAI Magics

When we think about learning, especially in fields as complex as AI and the blooming arena of generative AI, there’s a natural inclination to dive deep into theory. Books, papers, lectures – these are the traditional tools of knowledge acquisition. They’re valuable, no doubt, but they represent only one side of the coin. The real magic happens when we take that theoretical knowledge and apply it to real-world projects. This is where the abstract becomes tangible, where ideas transform into something you can see, touch, and interact with.

Generative AI, and particularly tools like ChatGPT, have revolutionized this process. Traditionally, the journey from concept to execution in AI was daunting. It could stretch over months or years, acting as a significant barrier to innovation and broader adoption of AI technologies. But now, with the advent of Large Language Models (LLMs), we’re witnessing a dramatic shift.

[2024/10/04] We can listen to this article as a Podcast Discussion, which is generated by Google’s Experimental NotebookLM. The AI hosts are both funny and insightful.

images/dify-your-weekend-genai-magics/Podcast_Dify_GenAI_Magic.mp3

Figure. Knowledge Gaps in Education (image by qorrectassess.com)

ChatGPT and similar tools offer a new paradigm for turning ideas into reality. Through what’s known as prompt engineering, we can now interact with these complex AI models using natural language, describing what we want in plain English, and the model generates or completes our thoughts in a structured, repeatable manner. This isn’t just a minor improvement; it’s a fundamental change in how we approach AI development.

However, a significant hurdle remains. Transforming these ingeniously crafted prompts into a viable product is not straightforward. Often, a single prompt isn’t enough to accomplish a task; it requires a sequence of coordinated prompts. This necessitates additional tools and platforms to turn the envisioned product into reality.

Fortunately, this challenge is not unique and has been recognized by many. After years of research and practical development by members from Tencent Cloud CODING DevOps team, a solution has emerged that promises to bridge this gap effectively. Among various platforms and tools designed to facilitate the transition from concept to product in the generative AI space, Dify stands out as a particularly innovative solution.

NOTE: The practicality and popularity of Dify are evidenced by the impressive milestone it achieved within just 36 hours of its launch, during which over 1,500 applications were created using this open-source project.

Dify (Do It For Yourselves) is carving out a unique development space. It’s not just another tool in the vast digital toolbox; Dify is a transformative ecosystem that caters to a wide array of users—from those taking their first steps in AI to seasoned developers and large enterprises. This platform stands out for its versatility and robustness, offering solutions across education, prototyping, agent development, and production. Let’s understand how Dify is making a significant impact in these areas.

Figure. Dify as a platform for many aspects: education, proof of concepts, agentic product and deploying product.

Dify as an Education Platform for Learning Hands-on Generative AI Technologies and Implementation Techniques

Generative AI technologies, in their infancy, present a landscape both vast and uncharted. At first glance, the complexity might seem daunting. Yet, the essence of understanding and utilizing these technologies lies not in mastering a steep learning curve overnight but in embracing a journey of incremental discovery.

At its core, Dify serves as an educational gateway to the world of generative AI technologies. Dify can fill the gap of education by presenting a visual learning tools. What sets Dify apart is its Visual AI apps orchestration studio, also known as Low Code/No Code method, a feature that allows users to visually piece together AI components into functional applications. This significantly lowers the barrier to entry for individuals without deep programming knowledge, making it an exemplary educational tool. The platform’s Retrieval-Augmented Generation (RAG) pipeline further enriches the learning experience by equipping AI applications with a vast vector database, enabling them to produce accurate and contextually relevant outputs. This feature effectively demonstrates how AI can leverage extensive resources to generate informed responses, providing a practical glimpse into advanced AI functionalities in action.

Building RAG in 5 minutes

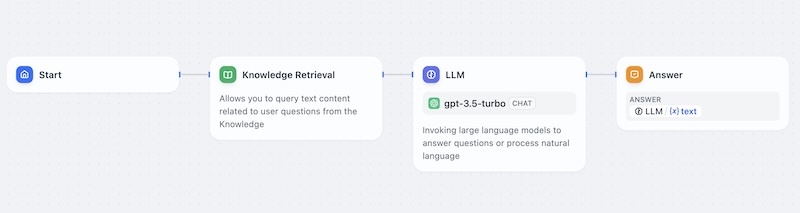

The following illustrates a simple RAG pipeline by using Dify workflow components,

Figure. Using Dify’s Knowledge Retrieval + Chatbot template, it illustrates a RAG pipeline that can be implemented in 5 minutes.

We can start with Dify’s Knowledge Retrieval + Chatbot template to get started. Let’s explore how this workflow template functions using the components of Dify, specifically focusing on the simplest form of RAG as depicted:

- Start:

- This is the initial trigger point of the workflow. It represents the beginning of a process where the AI application starts its operation, typically initiated by a user query or an automated event.

- Knowledge Retrieval:

- This component is key to the RAG setup. It allows the system to query a vast database or knowledge base to retrieve text content that is relevant to the user’s question. The purpose of this step is to gather all necessary information that might help in generating a well-informed response. It ensures that the AI has access to a broader set of data beyond its pre-trained model’s knowledge.

- LLM:

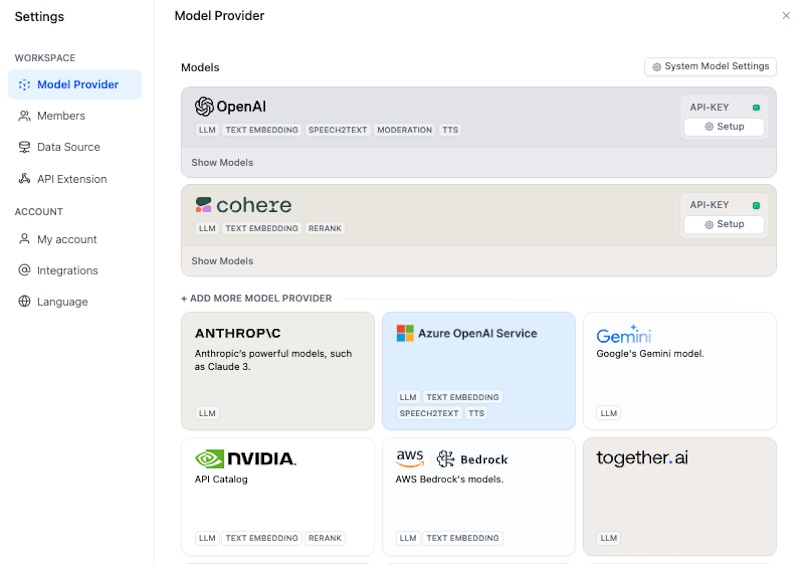

- Here, the workflow invokes a powerful language model, in this case,

gpt-3.5-turbo. Dify has many other LLMs connectivity available in the settings. This model uses the information retrieved in the previous step to understand the context and generate an appropriate response. The model processes natural language inputs and synthesizes information to formulate responses that are not only relevant but also contextually enriched by the retrieved knowledge.

- Here, the workflow invokes a powerful language model, in this case,

- Answer:

- The final output of the workflow is generated in this step. The answer module takes the response from the LLM and delivers it as text to the end user. This can be a direct answer to a user’s query, a completion of a task, or any other type of response that the application is designed to provide.

Figure. Dify has many other LLMs connectivity available in the settings. Usually each model will require a API key to access the service.

Enhanced Learning Experience with RAG:

How Dify workflow helps in an educational or learning context? This RAG pipeline facilitates a deeper understanding of how AI can dynamically utilize extensive databases to improve the relevance and accuracy of its responses. This setup demonstrates advanced AI functionalities in action, such as:

- Dynamic Data Retrieval: The ability to pull in data as needed from a vast repository enhances the AI’s responses, making them more aligned with the current context or specific user queries.

- Contextual Understanding and Response Generation: By using a state-of-the-art LLM, the system can understand nuances and generate responses that are not only factually accurate but also contextually appropriate.

- Real-time Learning and Adaptation: The workflow illustrates how AI can effectively use retrieved information to adapt its responses to new information or evolving contexts.

Dify Prompt IDE

Additionally, Dify’s Prompt IDE shines as a tool for mastering prompt engineering—a crucial skill in shaping AI responses. This environment enables users to experiment with various prompts, observe the immediate effects of their adjustments, and grasp the subtleties of directing AI to achieve specific outcomes.

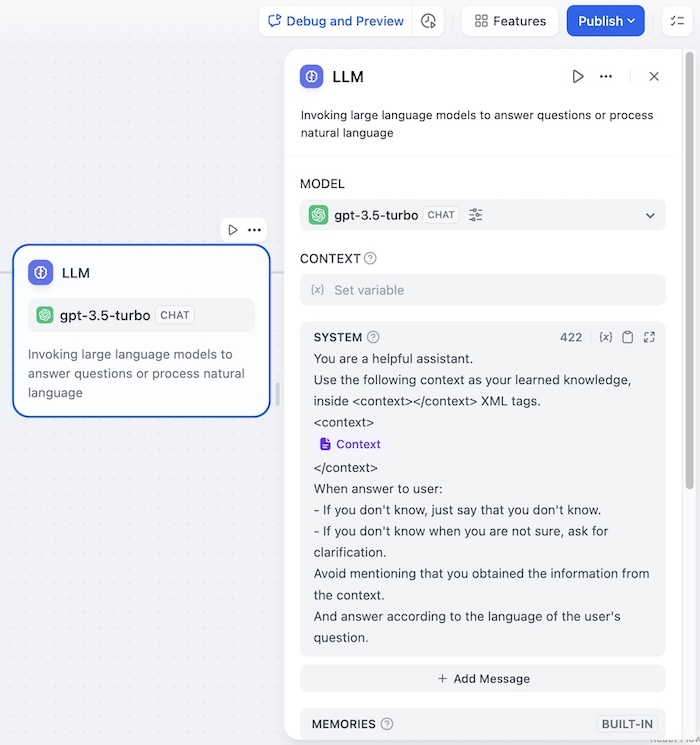

Figure. Expanding the LLM workflow component, we can interactively craft the prompt to summarize the retrieved context.

The picture showcases a detailed view of a LLM component within the Dify workflow, specifically focusing on the configuration of a LLM, to process and respond to natural language queries. This example is particularly insightful for understanding how to use Dify’s Prompt IDE to enhance prompt engineering techniques. Here’s a breakdown and elaboration of the features and functionalities shown in the image:

Overview of the LLM Component

- Model Selection:

- The component is set to use

gpt-3.5-turbo, which is a powerful version of OpenAI’s generative pre-trained transformers. We can choose another model provider that has been configured in the setting.

- The component is set to use

- Invocation Method:

- The model is invoked in a chat setting, which means it is optimized for conversational use, processing input and generating responses that are suitable for a dialogue format.

Configuration Details

- Context Variable:

- The “Set variable” feature allows the workflow to store and pass contextual information that the LLM can use while generating responses. This is crucial for maintaining context across interactions in a conversation.

- System Instructions:

- A detailed prompt instructions provided in the system configuration guides how the LLM should handle the information:

- It mentions using learned knowledge encapsulated within

<context></context>XML tags. The model can reference dynamically provided context to enhance its responses. - The instructions specify behaviors such as admitting lack of knowledge or asking for clarifications, which are essential for creating realistic and reliable AI interactions.

- The model is advised to avoid mentioning the source of its knowledge (the context), making the interaction seamless and more human-like.

Utilizing the Prompt IDE

The Prompt IDE in Dify allows users to interactively craft and refine the prompts that are fed into the LLM. This tool is essential for mastering prompt engineering, as it enables users to:

- Experiment with Different Prompts: Users can test various ways of framing questions or prompts to see how they influence the AI’s response. This is critical for optimizing the AI to handle specific types of queries or to perform tasks according to different user requirements.

- Observe Immediate Effects: Any changes made to the prompts can be immediately tested to observe how they alter the AI’s output. This immediate feedback is invaluable for understanding and improving the interaction dynamics between the user and the AI.

- Understand Subtleties: By tweaking prompts and observing outcomes, users can learn the subtleties of how certain phrasings or contextual clues can significantly change the nature of the AI’s responses.

This environment not only empowers developers to build more refined AI-driven applications but also serves as a hands-on learning tool for those new to AI, helping them understand the impact of linguistic nuances and context in AI interactions. Through such detailed configurations and tools, Dify enhances the capability of users to develop advanced, context-aware AI systems that are robust, responsive, and highly functional in real-world scenarios.

Dify as a PoC Platform for Rapid Prototyping and Experimentation with Ideas

For innovators and developers eager to transform their ideas into reality, Dify offers an invaluable resource for rapid prototyping and experimentation. The platform’s combination of Built-in Tools and Api-Based Tools streamlines the development process, allowing for swift iterations and refinements. This supportive environment fosters creative experimentation and facilitates the quick transition of concepts into tangible prototypes.

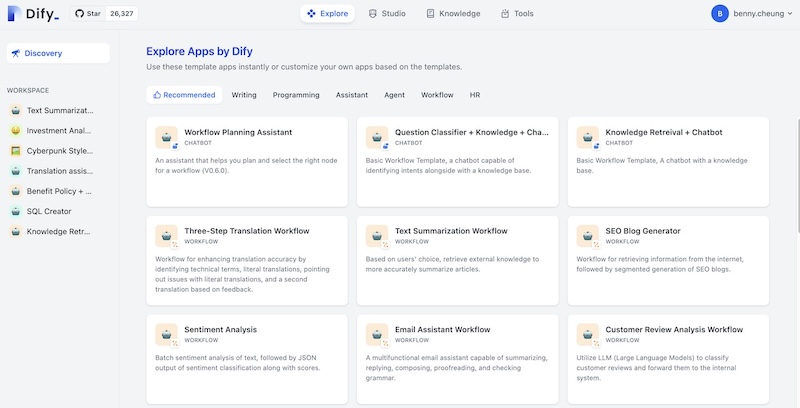

Figure. Illustrate Dify has come with wide range of workspace template, which helps to rapidly kick started a project.

This is important for an environment to support testing and validating hypotheses about how different components work together within AI applications. The ease with which users can assemble, disassemble, and reconfigure application elements encourages creative experimentation and allows for the rapid evolution of ideas from concept to prototype.

Creating Dify Apps - User Guides

Dify offers a suite of tutorials that guide users through the creation of popular generative AI applications. These tutorials are designed to harness the capabilities of LLMs in practical, user-friendly applications.

- Agent Assistant

- This tool taps into the reasoning power of LLMs to autonomously set goals, break down complex tasks, navigate tools, and refine processes to achieve tasks independently. It’s a leap towards making AI an active participant in problem-solving.

- Chat App

- This application facilitates a seamless conversation with users, operating on a straightforward question-and-answer basis. It’s designed to engage users in continuous dialogue, making interactions with AI more natural and intuitive.

- Text Generator

- The generator application stands out for its ability to produce high-quality text across various formats, from article summaries to translations, all based on user prompts. This application showcases the potential of AI in creative and content generation tasks, offering a glimpse into the future of automated writing.

Through these tutorials, Dify not only demystifies the process of creating generative AI applications but also opens up a world of possibilities for users to explore and innovate with AI technology.

Dify as an Agent Platform for Learning Agentic Component Construction and Prompt Engineering Techniques

As an agent platform, Dify is at the forefront of teaching users how to construct agentic components—self-operating units capable of executing tasks autonomously. The platform’s Agent Assistant feature leverages the power of LLMs to perform complex assignments independently, from conducting detailed analyses to creating comprehensive reports.

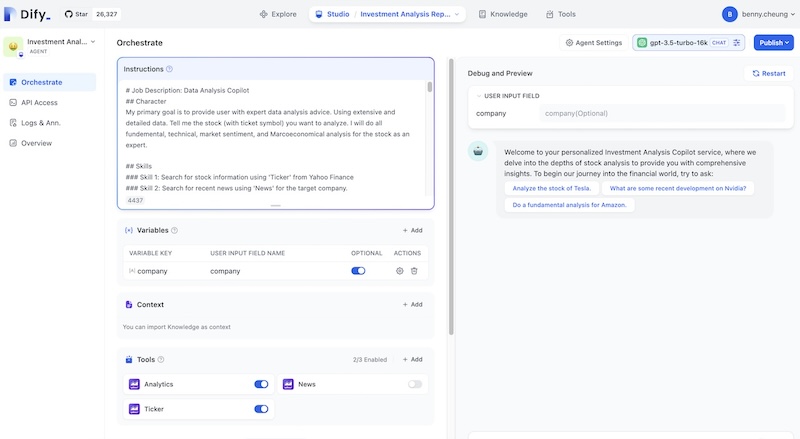

Figure. Example of a assistant agent application constructed completely in the Dify Studio with UI, the only pre-requisite is idea and high-level understanding of GenAI, no coding experience needed.

The Dify’s agent interface is specifically tailored for creating assistant agent, for example an Investment Analysis Copilot, which is a specialized AI agent designed to provide expert data analysis on stocks. Here’s a detailed breakdown of the various components and functionalities depicted in the interface:

- Instructions Section:

- Here, a detailed job description and the character of the agent are defined. The agent is described as a data analysis copilot with expertise in various analytical areas, such as fundamental, technical, and market sentiment analysis.

- Skills are clearly defined, which helps in structuring the agent’s capabilities. For example, the ability to search for stock information using a ‘Ticker’ from Yahoo Finance or searching for recent news about the target company.

- Variables:

- Variables like ‘company’ are defined here, which can be used to capture user input and influence the agent’s behavior and responses based on dynamic input.

- Context:

- The section hints at the ability to import knowledge as context, which would provide the AI with additional information to base its analyses and responses on, enhancing the accuracy and relevance of its output.

- Tools:

- Tools such as Yahoo’s “Analytics”, “News” and “Ticker” are shown, showing what built-in capabilities the agent can leverage to perform its tasks. These tools are designed to connect to data sources and APIs to fetch the required information.

- Debug and Preview Pane:

- This area on the right shows a preview of the user interface as it would appear to end-users. It provides sample interactions that potential users might have with the AI, such as analyzing specific stocks, asking for recent developments on a company, or conducting fundamental analysis. This is crucial for testing and refining how the agent interacts with users.

Agent Tools and Their Integration

Within the project Dify, tools for Agents and Workflows are neatly categorized into two distinct types. The first, known as Built-in Tools, are ingrained directly into the product’s core. They’re pre-coded, ready to be utilized without any additional tinkering. The second type, Api-Based Tools, stands on the shoulders of third-party APIs.

- Built-in Tools: These are integral parts of the platform and are pre-coded to perform specific functions like pulling stock data or latest news, ready to be deployed without additional coding.

- API-Based Tools: These tools are designed for integration with external services and can be customized or extended through APIs. They allow the agent to perform more complex and varied tasks, enhancing its utility and adaptability.

These tools are designed for ease, requiring no direct coding from the user’s end. Integration is as simple as providing interface definitions through familiar formats such as OpenAPI, Swagger, or even the OpenAI-plugin. This bifurcation not only simplifies the user experience but also broadens the scope of customization and functionality available within Dify’s ecosystem.

Dify as a Production Platform for Deploying and Monitoring AI Products

When we take on the topic of deploying generative AI applications, the complexity can seem daunting, especially for those new to the field. This is a section that dives deep into these intricacies, but it’s tailored for those with a solid grasp of the subject. For newcomers, it’s safe to bypass this for now.

In the production requirements of deployment and monitoring, Dify provides comprehensive solutions through its LLMOps capabilities. The platform’s Backend-as-a-Service (BaaS) offerings simplify the integration of AI functionalities into diverse products, facilitating seamless transitions from the development phase to full-scale deployment.

Quick start

We can clone the project from Dify’s github repository.

Once we cloned the repository. The easiest way to start the Dify server is to run our docker-compose.yml file. Before running the installation command, make sure that Docker and Docker Compose are installed on your machine:

cd dify/docker

docker compose up -d

One of the best thing with open source is that we can access the internals and understand how it works, without relying on the fragmented information released by a product company. The features are continuously to grow with a healthy community. If we are development enabled, nothing can stop us to extend the platform by our own codes.

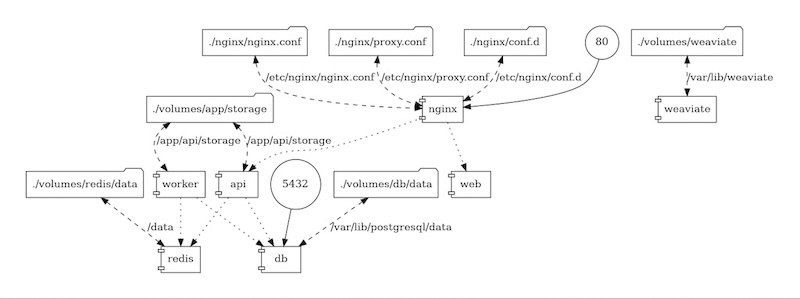

Docker deployment architecture

The architecture of a system is deployed using Docker, involving multiple services and volumes, and showing the relationships between them. This is a robust setup typical for modern web applications where separation of concerns and data persistence are crucial. The use of Docker and Docker Compose helps in managing these services in an isolated but networked manner, making development, deployment, and scaling more efficient.

Figure. Dify docker compose deployment architecture (image by Dify.ai).

- Nginx: Acts as the reverse proxy and web server. Configuration files are shown:

nginx/nginx.conf: Main configuration file./etc/nginx/nginx.conf: The mounted volume to persist or share configuration across containers.nginx/conf.d: Directory for additional configuration files.

- Weaviate: A vector search engine, configured with a volume for data storage:

/volumes/weaviate: Data storage for Weaviate./var/lib/weaviate: Mount point inside the Weaviate container for its data.

- PostgreSQL (

db): Database service with its data volume:/volumes/db/data: Data storage for PostgreSQL./var/lib/postgresql/data: Mount point inside the PostgreSQL container.

- Redis: In-memory data structure store, used as a database, cache, and message broker:

/volumes/redis/data: Data storage for Redis.

- API (

api): The backend API service which uses PostgreSQL and Redis:/app/api/storage: The volume for storing data relevant to the API.

- Worker (

worker): A background worker that interacts with both the API and the databases (PostgreSQL and Redis). - Web (

web): A front-end web service which communicates with the API.

Volume Mapping:

- Configuration files and data for Nginx, PostgreSQL, Redis, and Weaviate are stored in Docker volumes, which are directories on the host that are mounted into containers. This setup ensures data persistence across container restarts and sharing configuration among different containers.

Service Interactions:

- Nginx routes external requests to the appropriate containers/services, like the API or web interfaces.

- API connects to PostgreSQL for data storage and retrieval, and might also interact with Redis for caching or session storage.

- Worker processes might handle asynchronous tasks that involve heavy lifting or background processing, interacting with both the API and the databases.

This is what the docker compose shows when it completed,

... 40.6s

[+] Running 9/9

✔ Network docker_default Created 0.0s

✔ Container docker-weaviate-1 Started 0.2s

✔ Container docker-web-1 Started 0.2s

✔ Container docker-db-1 Started 0.2s

✔ Container docker-sandbox-1 Started 0.2s

✔ Container docker-redis-1 Started 0.2s

✔ Container docker-api-1 Started 0.0s

✔ Container docker-worker-1 Started 0.0s

✔ Container docker-nginx-1 Started 0.0s

After running, you can access the Dify dashboard in your browser at http://localhost/install and start the initialization process.

Upgrade Dify

Dify, being a vibrant and evolving entity with a community of contributors, undergoes updates with a rhythm almost as predictable as the ticking of a clock—weekly, to be precise.

To bring Dify to its latest state, one must navigate the digital landscape of its source code, specifically to the docker directory. This journey begins with a simple command that serves as our entry point: cd dify/docker.

cd dify/docker

git pull origin main

docker compose down

docker compose pull

docker compose up -d

Finally, with everything in place, docker compose up -d breathes life into the updated version of Dify. It’s a command that sets everything in motion, quietly working in the background (-d for detached mode), allowing us to continue our work uninterrupted while the new version takes its place.

The use of Docker for deployment architecture exemplifies Dify’s commitment to providing a scalable, manageable, and efficient production environment. This setup allows for easy scaling, robust data persistence, and separation of concerns—key components for modern, reliable web applications. Moreover, Dify’s open-source foundation encourages ongoing community contributions, ensuring the platform evolves in line with the latest developments in technology and user requirements.

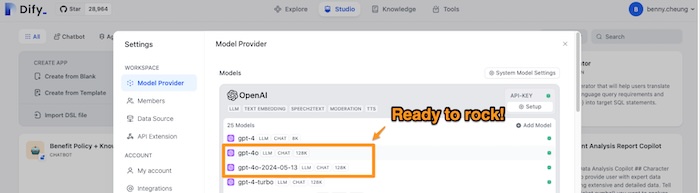

OpenAI GPT4-o Model

As of (2024-05-13), doing the Dify docker refresh and restart,

...

create mode 100644 api/core/model_runtime/model_providers/openai/llm/gpt-4o-2024-05-13.yaml

create mode 100644 api/core/model_runtime/model_providers/openai/llm/gpt-4o.yaml

...

Figure. The latest GPT4-o is available as one of the OpenAI models to use.

Expanding Dify Reaches - HTTP Request and Tools

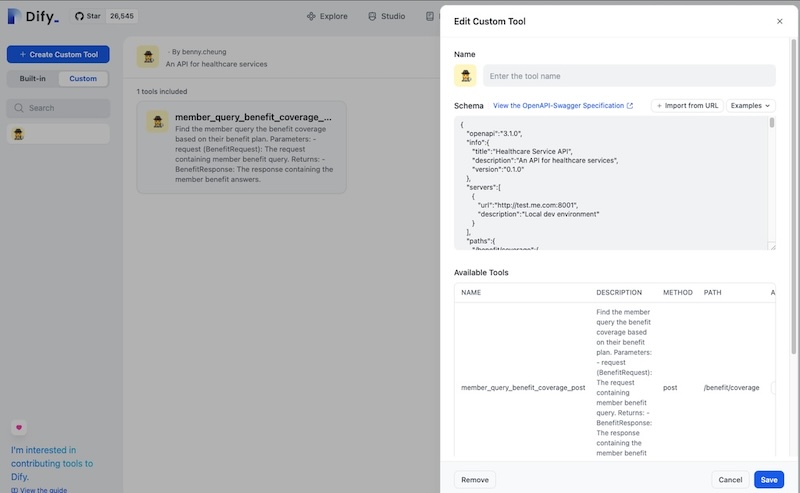

Dify presents a straightforward interface. A prominent “Create Custom Tool” button invites crafting something unique. This could be anything, but let’s consider a healthcare API tool designed for querying member benefit coverage. It’s a specific task, yet one that illustrates the platform’s capability to cater to niche requirements with ease.

The essence of Dify’s user experience is encapsulated in how it handles tool descriptions and schema. For instance, the healthcare tool mentioned doesn’t just float in the digital ether; it’s grounded by a clear purpose—enabling users to find member benefit coverage. The schema, adhering to the OpenAPI-Swagger Specification, outlines the structure of API calls in a language that’s both standardized and accessible. This means that even those not versed in the intricacies of API development can grasp the tool’s workings.

Figure. The “Create Custom Tool” is specifically showcasing the interface for creating and editing custom tools through the integration of APIs.

Endpoint configuration further demystifies the process, showing exactly how data is received and processed. And with server configurations pointing to a local dev environment, users get a sandbox for experimentation and testing—crucial steps before any rollout.

Technique to connect local API server

When working with Dify in a local development environment, the challenge often lies in making sure it can communicate with services running on the host machine. This is crucial for testing purposes. The process begins with identifying the host’s IP address, which acts as a bridge between Dify, running inside a Docker container, and the local service. By executing ifconfig, one might discover their IP to be something like 192.168.50.197.

With this information, configuring HTTP requests becomes straightforward. For instance, to interact with a service, one would direct requests to local dev server endpoint at http://192.168.50.197:8001/answer.

Similarly, to obtain the OpenAPI specification, which is essential for understanding the service’s capabilities, URLs like:

http://192.168.50.197:8001/docsfor interactive Swagger-UI.http://192.168.50.197:8001/openapi.jsonfor specification in JSON format are used.

An elegant enhancement to this setup involves the /etc/hosts file. By adding an entry such as

192.168.50.197 test.me.com

One can simplify access to the local service. This not only makes the URLs more readable but also mimics real-world DNS configurations, providing a more realistic environment for testing and development. This approach underscores the importance of seamless interaction between development tools and services, ensuring that testing is both efficient and reflective of actual deployment scenarios.

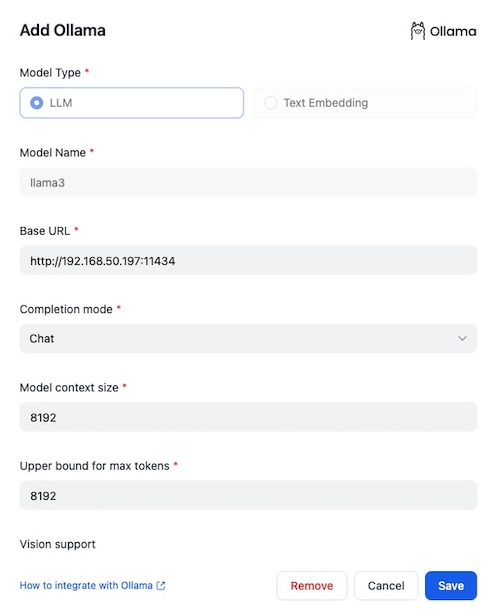

Connecting Dify to Local LLM

Integrating Dify with a local LLM through Ollama offers a streamlined approach to leveraging GPU acceleration for our models. Ollama, accessible via a straightforward CLI or a REST API, simplifies the interaction between our applications and the model. Starting is as easy as downloading and installing Ollama from their website.

For those utilizing macOS, pairing Ollama with Docker Desktop enhances the GPU acceleration capabilities. Launching a model, such as llama3, is done with a simple command. Ollama takes care of the rest, including downloading the model if it’s our first time using it. The llama3-8b model, for instance, is efficiently compressed to around 4GB for local use.

ollama run llama3

Refer to Ollama official site, it supports numerous open-source LLM models.

Dify’s integration with Ollama is seamless, designed to recognize Ollama as a model provider. When running Ollama on macOS, setting environment variables through launchctl is necessary for smooth operation. A quick restart of the Ollama application applies these settings.

launchctl setenv OLLAMA_HOST "0.0.0.0"

Finally, configuring Ollama within Dify completes the setup, making it ready for use. This integration not only simplifies the process but also optimizes the performance of local models through GPU acceleration.

Figure. Configure Dify Ollama as one of the model provider, which interface with the local IP address and port.

Concluding Remarks

When we encounter Dify, we’re not just stumbling upon another platform. We’re diving into an ecosystem that’s been professionally designed to support every phase of our journey with generative AI technologies. From the initial spark of learning to the final stages of production deployment, Dify stands as a beacon, guiding users through the often murky waters of AI development.

The beauty of Dify lies in its accessibility. It doesn’t matter if we’re a seasoned developer or someone who’s just starting to dabble in AI; Dify has something for everyone. It democratizes the process of creating with AI, making what once seemed like a daunting task approachable and, dare I say, enjoyable. This isn’t just about having a tool at our disposal. It’s about embracing an adventure—a weekend of exploration where we can bring our ideas to life with the magic of generative AI.

References

- Dify.ai - Dify is an open-source LLM app development platform. Its intuitive interface combines AI workflow, RAG pipeline, agent capabilities, model management, observability features and more, letting you quickly go from prototype to production.

- Dify.ai, Introducing Dify Workflow, Dify Insights Blog, 8 Apr 2024.

- Dify.ai, Easy-to-Use LLMOps Platform for Visually Creating and Operating Your AI Native Applications, Dify Insights Blog, 10 May 2023.

- Dify.ai, AI in Finance: Building an AI Investment Analysis Assistant with Dify, video, 18 Feb 2024.

- Xiao Yi, Unleashing AI in Finance: Building an AI Investment Analysis Assistant with Dify, Dify Insights, 26 Jan 2024.