Artwork by DALL·E 3

Exploring LLMs Through Minsky's Lens on Universal Intelligence

Philosophy of AI

Part 2 of 6

At the recent World Science Festival, the discussion titled “AI: Grappling with a New Kind of Intelligence” proposed that AI could be seen as an evolving “being” rather than merely a tool. This thought brought me back to a mesmerizing read from the April 1985 issue of Byte magazine: “Communication with Alien Intelligence”, in which the AI visionary Marvin Minsky explores the possibility of reaching out to minds from beyond our world.

Minsky argues that intelligent beings, regardless of their origin, face similar constraints and would develop comparable concepts and problem-solving methods. He discusses principles like the economics of resource management and the sparseness of unique ideas, suggesting these are universal. Minsky also addresses potential limitations in understanding vastly different intelligences and emphasizes the importance of basic problem-solving elements in intelligent communication.

[2024/10/04] You can listen to this article as a podcast discussion generated by Google’s experimental NotebookLM. The AI hosts are both funny and insightful.

images/exploring-llms-on-universal-intelligence/Podcast_Minsky_Universal_Intelligence.mp3

Figure. An illustration that metaphorically represents a conversation between human intelligence and artificial intelligence.

This perspective allows us to rethink the capabilities of Large Language Models (LLMs) and to ask how universal problem-solving techniques apply under their current constraints.

Universal Problem-Solving in AI

AI, as a form of intelligence, has the potential to compete with humans by developing its current capabilities through principles of universal problem-solving.

Specifically, the concept of universal problem-solving in AI, particularly in the context of LLMs, could include:

- Pattern Recognition: The ability to detect and assimilate patterns is a core attribute of human intelligence. Its effectiveness depends on a robust memory architecture.

- Language Understanding: Comprehension of language involves the interpretation of words and symbols, allowing an AI or human to construct meaningful responses.

- Adaptation and Learning: In machine learning, a system adapts to new information by changing its behaviour, and this progress is driven by data and algorithms.

These examples draw parallels between artificial and natural forms of problem-solving.

LLMs and the Economics of Resource Management

Expanding on Minsky’s discussion, LLMs can be viewed as systems that manage computational resources in order to optimize problem-solving. This involves balancing the allocation of computational power, memory, and data processing capabilities to efficiently process and generate language. Understanding the strategies LLMs use for resource management is key to understanding their operational mechanics and efficiency.

Resource management in this sense means making informed decisions about how to allocate and use limited assets to achieve specific goals efficiently and effectively. Intelligent systems, whether biological or artificial, must often operate within constraints of energy, time, materials, or computational power. This ability is not just about conserving resources but also about maximizing their potential impact, a crucial aspect of both human and artificial intelligence.

For instance, when an LLM processes a complex sentence, it must understand the context, maintain the flow of the conversation, and generate a relevant response. This requires a delicate balance: using enough resources to perform these tasks effectively, but not so many that the process becomes inefficient.

In the context of training LLMs, resource management involves effectively utilizing datasets and computational power. Adequate computational resources are critical for processing extensive datasets and experimenting with various model architectures. However, it is essential to note that avoiding overfitting or underfitting in these models primarily hinges on the quality and diversity of the training data, as well as the appropriate complexity of the model and the implementation of effective regularization techniques.
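To make the remark about regularization concrete, here is a minimal sketch of L2 (ridge) regularization for a one-parameter model y ≈ w·x. It is an illustration only and not how LLMs are actually trained: the data points, the function name `ridge_weight`, and the penalty values are all invented for this example. The closed-form solution w = Σxy / (Σx² + λ) shows how a larger penalty λ pulls the weight toward zero.

```python
def ridge_weight(xs, ys, penalty):
    """Closed-form ridge solution for y ~ w * x (no intercept).

    Minimizes sum((y - w*x)**2) + penalty * w**2 over w.
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + penalty)


# Hypothetical toy data, roughly y = 2x plus a little noise.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

# A larger penalty shrinks the fitted weight toward zero.
for penalty in (0.0, 1.0, 10.0, 100.0):
    print(f"penalty={penalty:6.1f}  w={ridge_weight(xs, ys, penalty):.4f}")
```

With no penalty the weight fits the toy data as closely as a single parameter can. As the penalty grows, the model accepts a worse fit in exchange for a smaller weight, which is the same trade-off that regularization makes at a much larger scale.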

The Sparseness of Unique Ideas

“The Sparseness Principle” provides a framework for understanding the nature of intelligence as it pertains to the generation of unique ideas. This principle posits that the universe of possible computational structures is vast, yet the processes that yield significant outputs are few, leading to a natural scarcity of truly unique ideas.

Minsky’s Experiment on the Sparseness Principle

The Sparseness Principle: When relatively simple processes produce two similar things, those things will tend to be identical!

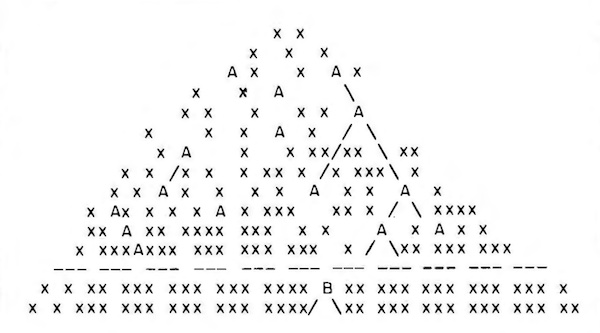

Figure. A universe of possible computational structures (image credit: Byte magazine, April 1985).

This original diagram represents a visual abstraction from Minsky’s technical experiment on the Sparseness Principle, which is closely tied to the concept of Turing machines as described by Alan Turing in 1936. The diagram illustrates the outcomes of an experiment where all possible computational processes — symbolized by Turing machines — are explored.

In the diagram:

- Each ‘X’ marks a process (or Turing machine) that failed to produce significant behavior. These processes either halted immediately, erased their input data, or entered an endless loop without performing any meaningful computation.

- The ‘A’s represent a small subset of these processes that all converged on a similar, non-trivial behavior. They performed what Minsky calls “counting” operations, which are simple yet non-trivial tasks that could be considered the precursors to arithmetic operations.

- The ‘B’ marks the emergence of a more complex machine, which occurs far less frequently. This B-machine represents an evolutionary leap in complexity from the A-machines.

Minsky’s experiment reveals that among the vast number of possible computational processes, most are unproductive or trivial (the ‘X’s), but a sparse few (the ‘A’s) tend to exhibit identical or similar useful behaviors. This consistency among the A-machines despite the diversity of the starting rules suggests an inherent efficiency in certain computational paths which lead to the development of basic arithmetic-like functions.

The illustration metaphorically suggests that in the early stages of computational (or cognitive) development, simpler and more efficient processes are more likely to emerge and be replicated.

This experiment underscores a foundational concept in both computer science and cognitive science: despite an astronomical number of possible processes or ideas, functional and efficient ones are rare but tend to be similar across different systems. These efficient processes form the building blocks of more complex operations, whether in computational machines or in the human mind, leading to the advanced forms of intelligence that we strive to understand.
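The flavor of this experiment can be reproduced on a very small scale. The sketch below is a toy reconstruction and not Minsky's actual procedure: it enumerates every two-state, two-symbol Turing machine, runs each on a blank tape, and groups the machines by outcome. The encoding, the step cap `MAX_STEPS`, and the choice to classify behavior by the number of 1s left on the tape are all assumptions made for illustration.

```python
from collections import Counter
from itertools import product

HALT = -1          # pseudo-state that stops the machine
STATES = (0, 1)    # two working states; state 0 is the start state
SYMBOLS = (0, 1)   # tape alphabet; 0 doubles as the blank symbol
MOVES = (-1, 1)    # head moves one cell left or right
MAX_STEPS = 20     # step cap; machines still running are counted as "no halt"


def run(machine, max_steps=MAX_STEPS):
    """Run one machine on a blank tape and summarize its outcome."""
    tape, pos, state = {}, 0, 0
    for _ in range(max_steps):
        write, move, next_state = machine[(state, tape.get(pos, 0))]
        tape[pos] = write
        pos += move
        state = next_state
        if state == HALT:
            # Behavior class: how many 1s the machine left behind.
            return ("halt", sum(tape.values()))
    return ("no halt", None)


def survey():
    """Enumerate every transition table and tally the outcomes."""
    keys = list(product(STATES, SYMBOLS))                       # 4 table entries
    actions = list(product(SYMBOLS, MOVES, STATES + (HALT,)))   # 12 per entry
    counts = Counter()
    for choice in product(actions, repeat=len(keys)):           # 12**4 machines
        counts[run(dict(zip(keys, choice)))] += 1
    return counts


if __name__ == "__main__":
    for outcome, n in survey().most_common():
        print(outcome, n)
```

Even in this tiny universe, the 20,736 distinct rule tables collapse into only a handful of outcome classes, and many different machines end up doing exactly the same thing. That collapse of many descriptions into few behaviors is the pattern the diagram depicts with its X's, A's, and B.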

The Limitations of LLMs

When we consider the generation of language and concepts by LLMs, we often find that these models produce outputs that seem familiar or derivative of existing ideas. This is because LLMs operate by parsing and recombining vast datasets of human language. They do not create in a vacuum but instead draw upon the collective pool of human knowledge encoded in their training data. As Minsky suggests, the rarity of unique ideas is due to the limited number of simple processes that can lead to significant and distinct outcomes. In the context of LLMs, this means that the apparent creativity of these models is often the result of remixing or repurposing existing information rather than creating something entirely new from scratch.

Consider the example of an LLM writing a poem. The model might generate verse that aligns with human poetic constructs, but it is doing so by following patterns it has learned rather than conjuring new forms of poetic expression. Similarly, if an LLM is tasked with solving a mathematical problem, it will apply algorithms and heuristics derived from existing mathematical knowledge. Its “solutions” are recombinations of established methods, not groundbreaking mathematical discoveries.

In both examples, the Sparseness Principle is at play. The LLMs reach into their finite set of rules—their programming and the data they’ve been trained on—and pull out combinations that are statistically likely to be successful based on past human endeavors. This iterative, combinatorial approach to problem-solving and content generation is a digital reflection of the principle Minsky describes. The outputs are constrained by the limits of their design and training, which, while vast, cannot encompass the true infinity of potential human creativity.

This understanding is crucial when considering the potential and boundaries of artificial intelligence. It highlights a fundamental truth about LLMs: they are powerful tools that can mimic human-like creativity and problem-solving, but they operate within a bounded set of possibilities defined by their human creators. The Sparseness Principle thus serves as a reminder of the inherent limitations of artificial systems in replicating the depth and originality of human thought and the extraordinary nature of true creativity and innovation.

Causes and Clauses

Marvin Minsky’s contemplations about CAUSES and CLAUSES, relating to the potential communication with alien intelligence, provide a fascinating parallel to understanding the intricacies of LLMs. Minsky suggests that our method of attributing causes to effects and compartmentalizing experiences into object-symbols, difference-symbols, and cause-symbols is not merely an evolutionary coincidence but a likely path of cognitive development. This implies that if alien intelligences evolved cognitively, they might share similar fundamental structures in their communication and thinking processes, potentially enabling us to find common ground.

The concept of using clause-structures in human language, which allows us to build complex ideas from basic components, can be seen as a universal mechanism of intelligence. It is this mechanism that could be at play within LLMs as they process and generate language. However, LLMs’ understanding and generation are bound by the programming and data they have been fed, and their ‘clause-structures’ are predefined by their algorithms. While they can mimic the complexity of thought by chaining simpler components, they do not inherently ‘understand’ these structures as we do.

Explaining in the Cultural Context

Comparing an analytical breakdown into components, versus a more holistic perspective, can challenge us to think about the nature of intelligence and cognition beyond our human-centric viewpoint. It raises the question of whether our tendency to see the world in parts, which LLMs also mimic, is truly the most effective way of processing information. Perhaps, in the vastness of all conceivable ideas, there are forms of intelligence, be they alien or artificial, that perceive and understand in ways that are fundamentally different from ours and from the capabilities of our current AI systems. This notion broadens the scope of what we consider when developing and interacting with LLMs, pushing us to explore beyond our conventional frameworks and potentially paving the way for revolutionary advancements in AI.

Roar of AI: Understanding Beyond Language

While an LLM can play a significant role in explaining itself, explainability is not limited to language alone. In the context of AI, explainability refers to the ability to understand and interpret the decisions made by the AI system. This can be achieved through a variety of techniques, including visualizations and feature importance analysis, and not only by generating human-readable explanations.

Although language can be a powerful tool for explaining things, it is neither always necessary nor always sufficient for achieving explainability in AI. In some cases, visualizations or other non-linguistic techniques may be more effective in helping users understand how the AI system arrived at its decisions.
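As one example of a non-linguistic technique, feature importance can be estimated by occlusion: mask one input at a time and measure how much the output moves. The sketch below is a minimal illustration under stated assumptions. The function `occlusion_importance`, the stand-in `toy_model`, its weights, and the zero baseline are all hypothetical and are not taken from any particular explainability library.

```python
def occlusion_importance(model, inputs, baseline=0.0):
    """Score each feature by how much the output changes when it is masked."""
    reference = model(inputs)
    scores = []
    for i in range(len(inputs)):
        masked = list(inputs)
        masked[i] = baseline          # replace one feature with the baseline
        scores.append(abs(reference - model(masked)))
    return scores


# Hypothetical stand-in for an opaque model: a fixed linear scorer.
WEIGHTS = [2.0, 0.0, -1.0]


def toy_model(features):
    return sum(w * x for w, x in zip(WEIGHTS, features))


scores = occlusion_importance(toy_model, [1.0, 5.0, 3.0])
print(scores)
```

In this toy case the second feature has the largest input value yet receives a score of zero, because the model ignores it. Plotting such scores as a bar chart conveys which inputs mattered without requiring the model to say anything at all.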

Figure. The image uses Ludwig Wittgenstein’s concept as a metaphor for the dialogue between human and artificial intelligence, each presented in a different dimension.

The quote by Wittgenstein, “If a lion could speak, we could not understand him”, is often interpreted as a commentary on the limitations of language and the challenges of understanding different perspectives or ways of thinking. However, when it comes to communicating with AI and making AI explainable, it is important to recognize that there are many ways of achieving explainability, and language is just one of them. Ultimately, the goal of explainability in AI is to provide users with the information they need to understand and trust the decisions made by the AI system, regardless of the specific techniques used to achieve it.

Concluding Remarks

Marvin Minsky’s discourse on the limitations of understanding different intelligences is particularly relevant when considering communication with non-human intelligences, such as alien minds or artificial entities like LLMs. He proposes that our cognitive processes, reflected in language through nouns, verbs, and clauses, are not arbitrary but stem from evolutionary solutions to universal problems. These cognitive structures allow us to create complex ideas from simpler components, a process that may be a universal trait of intelligence.

This indicates that despite the diversity of thought processes across potential forms of intelligence, there could be a common foundation rooted in simplifying and structuring complex information. Consequently, if we were to encounter an alien intelligence, it might share some fundamental cognitive strategies, such as categorizing objects and actions, which could make communication possible. Conversely, if their cognition does not compartmentalize experiences into discrete units, this could pose significant challenges for mutual understanding. This speculation extends to the realm of AI, where LLMs might mimic these cognitive structures within their programming, thus bridging the gap between human and machine understanding. However, the sparseness of truly novel ideas and concepts remains a challenge, as both human and machine intelligences grapple with the limits of complexity and the finite nature of innovative combinations.

Resources

- Marvin Minsky, Communication with Alien Intelligence, Byte Magazine, Apr 1985.

- Published in Extraterrestrials: Science and Alien Intelligence (Edward Regis, Ed.) Cambridge University Press 1985. Also published in Byte Magazine, April 1985.

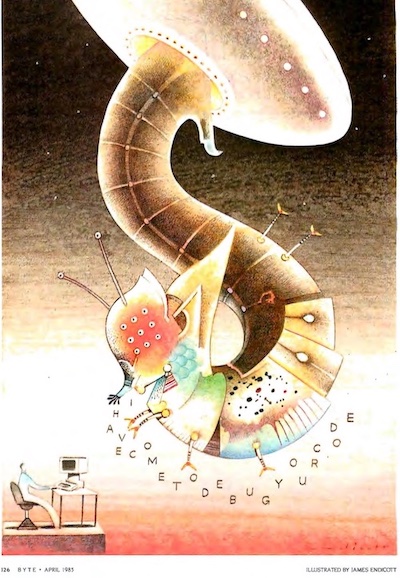

Figure. The illustration from Byte magazine’s original article elegantly captures the essence of how communication with an alien intelligence might unfold. This image has stuck in my mind ever since (image credit: Byte magazine, April 1985).

Books

- Wittgenstein, Ludwig, and Gertrude Elizabeth Margaret Anscombe. Philosophical Investigations: The English Text of the Third Edition. Vol. 1. Prentice Hall, 1973.

- Jobst Landgrebe & Barry Smith, Why Machines Will Never Rule the World: Artificial Intelligence without Fear, Routledge, 12 Aug 2022. ISBN: 978-1032309934

- The book’s core argument is that an artificial intelligence that could equal or exceed human intelligence―sometimes called artificial general intelligence (AGI)―is for mathematical reasons impossible. It offers two specific reasons for this claim:

- Human intelligence is a capability of a complex dynamic system―the human brain and central nervous system.

- Systems of this sort cannot be modelled mathematically in a way that allows them to operate inside a computer.


Conferences

- World Science Festival, AI: Grappling with a New Kind of Intelligence, video, 24 Nov 2023.

- A novel intelligence has roared into the mainstream, sparking euphoric excitement as well as abject fear. Explore the landscape of possible futures in a brave new world of thinking machines, with the very leaders at the vanguard of artificial intelligence.

- In this inspiring two-hour discussion led by Brian Greene, distinguished AI researchers Yann LeCun, Sébastien Bubeck, and Tristan Harris take a deep dive into the history and current state of artificial intelligence, as well as its promising future. They offer well-grounded speculations on the emergence of a new kind of intelligence, shaping the conversation with their expertise and visionary perspectives.

Large Language Models (LLMs)

- Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, L., & Polosukhin, I., Attention is all you need, In Proceedings of the 31st International Conference on Neural Information Processing Systems, 2017.

- The seminal paper introducing the Transformer model, which forms the basis for many modern LLMs.

- Andrej Karpathy, Intro to Large Language Models, video, 22 Nov 2023.

- This is a 1 hour general-audience introduction to Large Language Models: the core technical component behind systems like ChatGPT, Claude, and Bard. What they are, where they are headed, comparisons and analogies to present-day operating systems, and some of the security-related challenges of this new computing paradigm.

- OpenAI’s Research Blog on Language Models

- A collection of articles and research papers from OpenAI, discussing various aspects of LLMs including GPT-4.